Sri Amit Ray Quantum AI Lab

Quantum Computing Projects

We focus on building strong quantum computing research for the betterment of humanity. Quantum machine learning is our key focus area. International collaborations with universities, industry and governments are welcome.

Our focus remains on innovation to improve human conditions at each step we walk. We are always open to new ideas. Sri Amit Ray Quantum AI Lab research uses quantum theory to develop technologies that can bring betterment in human lives.

Here, we focus mostly on applications and algorithms for quantum machine learning, quantum deep learning, quantum neural networks, quantum deep reinforcement learning and quantum-classical interfaces for our human-centered projects.

Our research spans emerging fields of AI and quantum computing, such as compassionate care-giving, compassionate health care, multi-omics data integration, precision medicine, quantum machine learning, quantum digital medicine, brain-computer interfaces, combating antibiotic-resistant bacteria, balance control of elderly people, computer-aided drug design and AI to fight antimicrobial resistance. Compassionate AI motivates systems to go out of their way to relieve the physical, mental or emotional pain of humanity.

Compassionate artificial intelligence systems are increasingly required for looking after those unable to care for themselves, especially the sick, physically challenged persons, children and elderly people. The scope of our research is how AI and quantum computers can serve the emotional, social and spiritual needs of humanity, especially for the poor, patients and elderly people.

Our Key Quantum Computing Projects

Our research lab keeps pace with current developments in quantum computing research. We focus our research activities on Quantum Artificial Intelligence. There are three approaches to quantum computing: gate-based quantum computing, quantum annealing (QA) and adiabatic quantum computation (AQC). Here, we focus mostly on quantum annealing implementations of the algorithms for quantum deep neural learning and quantum deep reinforcement learning for our human-centered projects.
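To make the annealing approach concrete: an annealer searches for the bit string that minimises a quadratic energy function (a QUBO). The following is only a minimal sketch using the Python standard library. The 3-variable matrix `Q` is a hypothetical example chosen for illustration, and brute-force enumeration stands in for the quantum hardware.

```python
from itertools import product

# Hypothetical QUBO coefficients (illustrative only):
# energy(x) = sum over i, j of Q[i][j] * x_i * x_j, with x_i in {0, 1}
Q = [
    [-1.0,  2.0,  0.0],
    [ 0.0, -1.0,  2.0],
    [ 0.0,  0.0, -1.0],
]

def qubo_energy(x, Q):
    """Energy of a binary assignment x under the upper-triangular matrix Q."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))

def brute_force_minimum(Q):
    """Enumerate all 2^n assignments; feasible only for tiny n."""
    n = len(Q)
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(x, Q))

best = brute_force_minimum(Q)
best_energy = qubo_energy(best, Q)
print(best, best_energy)
```

The diagonal entries reward switching a variable on, while the positive off-diagonal entries penalise switching on neighbouring variables together. An annealer explores the same energy landscape physically instead of by enumeration.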

Roadmap for 1000 Qubits Fault-tolerant Quantum Computers

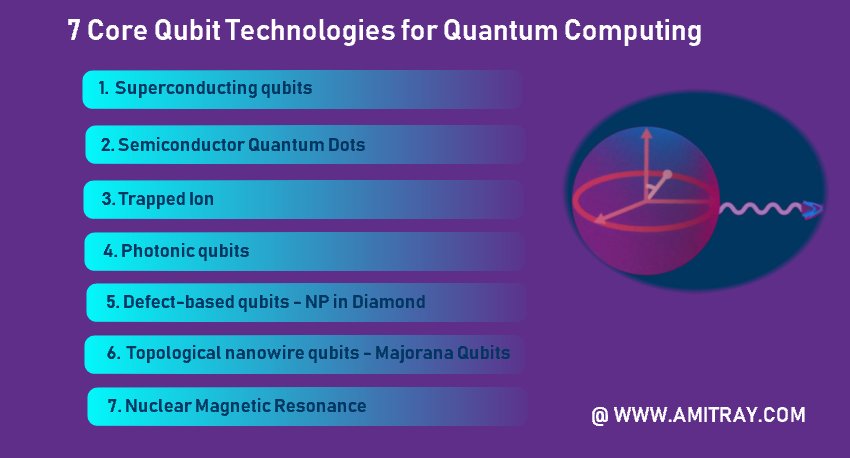

7 Core Qubit Technologies for Quantum Computing

Quantum Computer Algorithms for AI

Spin-orbit Coupling Qubits for Quantum Computing and AI

The 10 Key Properties of Future of Quantum Machine Learning

- Knowledge: Basic ML (kernels, NNs, transformers). No quantum required.

- Hardware/Software: Python 3.12+, Jupyter Notebook. Run on local simulator; for real devices, use IBM Quantum (free tier).

- Installation: Run `pip install qiskit qiskit-aer qiskit-ibm-runtime qiskit-machine-learning qiskit-algorithms numpy matplotlib scikit-learn torch` (`qiskit-aer` provides the Aer simulators).

- Resources: Use Jupyter for interactivity: cells for code, Markdown for notes.

- Tips: Start with simulators (Aer backend) to avoid noise. Track qubit limits (NISQ: 5-20 qubits). Debug: Print circuit diagrams with `qc.draw()`.

- Open Jupyter: New notebook.

- Import: `from qiskit import QuantumCircuit, transpile; from qiskit_aer import AerSimulator; from qiskit.visualization import plot_histogram` (from Qiskit 1.0 onward, `Aer` and `execute` can no longer be imported from `qiskit`).

- Create circuit: `qc = QuantumCircuit(1); qc.h(0); qc.measure_all()` (H + measure).

- Simulate: `backend = AerSimulator(); job = backend.run(transpile(qc, backend), shots=1024); result = job.result(); plot_histogram(result.get_counts())`.

- Expected: Histogram ~50% '0', 50% '1'. Explanation: H puts the qubit in an equal superposition; measurement randomizes.

- Tip: Increase shots for smoother probabilities. Visualize: `plot_histogram` returns a Matplotlib figure; call `.savefig('hist.png')` on it to save the plot as PNG.

- Purpose: Load vector into state amplitudes—compact for ML data.

- Code (2D vector [0.6, 0.8]):

- Run: Fidelity check: `np.abs(np.vdot(data / np.linalg.norm(data), state.data[:2])) > 0.95`.

- Explanation: RY rotates to set probabilities; RZ adds phases. Longer vectors need more qubits, but only logarithmically many (2^10 = 1024 dims → 10 qubits).

- Tip: For images (MNIST), flatten and normalize pixels.

- Purpose: For matrix ops in ML (e.g., kernel Gram matrices).

- Code (2x2 matrix):

- Simulate: Extract the submatrix ⟨0|U|0⟩, i.e. the block of U in which the ancilla starts and ends in |0⟩.

- Explanation: Ancilla selects rows; controls embed elements. QSVT applies functions (e.g., inverse).

- Tip: For large matrices, use sparse block encoding—reduces gates.

- Probabilistic outputs: Average 1000+ shots.

- Qubit limits: Encode low-D first (2-4 dims).

- Applications: Prep data for kernels (Step 2).

- Extend to QQ: Encode quantum states for tomography.

- Import: `from qiskit.circuit.library import ZZFeatureMap; from qiskit_machine_learning.kernels import FidelityQuantumKernel` (this class replaces the older `QuantumKernel`, which took a `quantum_instance`).

- Create: `feature_map = ZZFeatureMap(2, reps=2, entanglement='linear'); qkernel = FidelityQuantumKernel(feature_map=feature_map)`.

- Explanation: ZZ adds entangling ZZ gates, which capture correlations between features.

- Tip: Reps=1 for NISQ; increase for expressivity.

- Data: `X = np.random.rand(4, 2)` (4 samples, 2 features).

- Compute: `K = qkernel.evaluate(X)` → 4x4 PSD matrix.

- Visualize: `import matplotlib.pyplot as plt; plt.imshow(K); plt.colorbar()`.

- Explanation: Diagonal = 1 (normalized); off-diagonal = similarity.

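- Load: `from sklearn.datasets import load_digits; digits = load_digits(); X = digits.data[:100] / 16; y = (digits.target % 2).astype(int)` (binary odd/even labels on a small subset; pixel values in `load_digits` range from 0 to 16).

- Split: `from sklearn.model_selection import train_test_split; X_train, X_test, y_train, y_test = train_test_split(X[:, :4], y, test_size=0.3)  # 4 features → 4 qubits with ZZFeatureMap`.

- Train QSVM: `ZZFeatureMap` uses one qubit per feature, so build a 4-feature kernel first: `from qiskit.circuit.library import ZZFeatureMap; from qiskit_machine_learning.kernels import FidelityQuantumKernel; qkernel = FidelityQuantumKernel(feature_map=ZZFeatureMap(4)); from sklearn.svm import SVC; qsvc = SVC(kernel=qkernel.evaluate); qsvc.fit(X_train, y_train); print(qsvc.score(X_test, y_test))` (the original guide reports roughly 85% accuracy; results depend on which features are kept).

- Compare: Train a classical RBF SVM on the same split: quantum may edge out on non-linear data.

- Explanation: Kernel trick + quantum map = hybrid classifier. The full 8x8 digit images have 64 features, which need 6 qubits with amplitude encoding (2^6 = 64) or 64 qubits with one-feature-per-qubit maps such as `ZZFeatureMap`.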

- Tip: Downsample images; use PCA for dim reduction.

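- Scalability: A kernel matrix on M samples needs on the order of M^2 circuit evaluations, so keep to about 10 samples on NISQ hardware. Use batch evaluation.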

- Applications: Anomaly detection (quantum data), finance (kernels for portfolios).

- Imports: `from qiskit.circuit.library import RealAmplitudes, ZZFeatureMap; from qiskit_machine_learning.algorithms import VQC; from qiskit_algorithms.optimizers import COBYLA`.

- Ansatz: `ansatz = RealAmplitudes(4, reps=3)  # RY + CX layers` (trainable rotations + entanglement).

- Setup: `optimizer = COBYLA(maxiter=100); vqc = VQC(feature_map=ZZFeatureMap(4), ansatz=ansatz, optimizer=optimizer)` (recent releases take an optional sampler primitive instead of a backend argument).

- Train: `vqc.fit(X_train, y_train); print(vqc.score(X_test, y_test))`.

- Explanation: Feature map encodes; ansatz classifies via measurements. Optimizer tunes params via loss (e.g., cross-entropy).

- Tip: Monitor loss—plot with Matplotlib. For multiclass, use one-vs-rest.

- Purpose: Generate images (e.g., MNIST digits) via quantum generator.

- Imports: `import torch; from torch import nn` (hybrid).

- Generator Class:

- Explanation: Gen produces quantum samples; Disc classifies real/fake. Alternate training for adversarial learning.

- Full Repo: Use on MNIST 4x4 patches—visually inspect outputs.

- Tip: Noise limits: 100 shots/iter. Mitigate with error-mitigation techniques, tested first on noisy simulators.

- Plateaus: Limit reps<5; use SPSA optimizer for noisy gradients.

- Applications: qCNNs for vision, qGANs for molecules (chemistry sims).

- Imports: `from qiskit.circuit.library import EfficientSU2`.

- Code:

- Explanation: CX entangles for correlations; measurements give weights.

- Tip: For full QSVT, use advanced libs (repo has impl).

- Stack: Attention + Quantum FFN (RealAmplitudes) + Residual (add states via CNOT).

- Repo Demo: Tokenize text (e.g., "cat dog"), encode → QC → Classify sentiment (acc~80% on small NLP).

- Explanation: Inference: Forward pass on quantum device; train classically.

- Tip: Seq len=4-8 max. Benchmark time vs. classical Transformer.

- Qubit overhead: Embed seq in log N qubits.

- Applications: Quantum NLP (text class), vision (qViT for images).

- Eval: `from sklearn.metrics import accuracy_score; preds = vqc.predict(X_test); print(accuracy_score(y_test, preds))`.

- Optimize: Swap COBYLA for ADAM, or for SPSA when gradients are noisy (`from qiskit_algorithms.optimizers import ADAM, SPSA`).

- Fidelity: `from qiskit.quantum_info import state_fidelity; print(state_fidelity(target_state, actual_state))`.

- Cloud: `from qiskit_ibm_runtime import QiskitRuntimeService; service = QiskitRuntimeService(); backend = service.least_busy(operational=True, simulator=False)` (requires a saved IBM Quantum account).

- Noise Mitigation: `from mthree import M3Mitigation; mit = M3Mitigation(backend); mit.cals_from_system(qubits); quasi = mit.apply_correction(raw_counts, qubits)` (install with `pip install mthree`; `qubits` lists the measured qubits).

- Benchmark: Time QSVM vs. SVM on 100 samples.

- Explanation: Start sim, move to 127-qubit IBM (2025 access).

- Clone: `git clone https://qml-tutorial.github.io/; cd qml-tutorial; jupyter notebook` (if cloning this address fails, look for the repository link on that page).

- Run: MNIST kernel, GAN, Transformer demos.

- Noise: 10-20% error—use mitigation.

- Applications: Finance (qKernels for risk), health (qGANs for synth data).

- Full code at https://qml-tutorial.github.io/.

- "Quantum Machine Learning: A Hands-on Tutorial for Machine Learning Practitioners and Researchers" (arXiv:2502.01146v1, February 3, 2025).

- Ray, Amit. "Spin-orbit Coupling Qubits for Quantum Computing and AI." Compassionate AI, 3.8 (2018): 60-62. https://amitray.com/spin-orbit-coupling-qubits-for-quantum-computing-with-ai/.

- Ray, Amit. "Quantum Computing Algorithms for Artificial Intelligence." Compassionate AI, 3.8 (2018): 66-68. https://amitray.com/quantum-computing-algorithms-for-artificial-intelligence/.

- Ray, Amit. "Quantum Computer with Superconductivity at Room Temperature." Compassionate AI, 3.8 (2018): 75-77. https://amitray.com/quantum-computing-with-superconductivity-at-room-temperature/.

- Ray, Amit. "Quantum Computing with Many World Interpretation Scopes and Challenges." Compassionate AI, 1.1 (2019): 90-92. https://amitray.com/quantum-computing-with-many-world-interpretation-scopes-and-challenges/.

- Ray, Amit. "Roadmap for 1000 Qubits Fault-tolerant Quantum Computers." Compassionate AI, 1.3 (2019): 45-47. https://amitray.com/roadmap-for-1000-qubits-fault-tolerant-quantum-computers/.

- Ray, Amit. "Quantum Machine Learning: The 10 Key Properties." Compassionate AI, 2.6 (2019): 36-38. https://amitray.com/the-10-ms-of-quantum-machine-learning/.

- Ray, Amit. "Quantum Machine Learning: Algorithms and Complexities." Compassionate AI, 2.5 (2023): 54-56. https://amitray.com/quantum-machine-learning-algorithms-and-complexities/.

- Ray, Amit. "Neuro-Attractor Consciousness Theory (NACY): Modelling AI Consciousness." Compassionate AI, 3.9 (2025): 27-29. https://amitray.com/neuro-attractor-consciousness-theory-nacy-modelling-ai-consciousness/.

- Ray, Amit. "Modeling Consciousness in Compassionate AI: Transformer Models and EEG Data Verification." Compassionate AI, 3.9 (2025): 27-29. https://amitray.com/modeling-consciousness-in-compassionate-ai-transformer-models/.

- Ray, Amit. "Hands-On Quantum Machine Learning: Beginner to Advanced Step-by-Step Guide." Compassionate AI, 3.9 (2025): 30-32. https://amitray.com/hands-on-quantum-machine-learning-beginner-to-advanced-step-by-step-guide/.

- Ray, Amit. "Implementing Quantum Generative Adversarial Networks (qGANs): The Ultimate Guide." Compassionate AI, 3.9 (2025): 60-62. https://amitray.com/implementing-quantum-generative-adversarial-networks-qgans-ultimate-guide/.

- Ray, Amit. "Sri Amit Ray Consciousness Factor (ε) — Integrating Matter-Energy and Consciousness." Compassionate AI, 4.11 (2025): 75-77. https://amitray.com/ray-consciousscious-factor-matter-energy-consciousness/.

- Ray, Amit. "Designing Compassionate AI Consciousness with the Sri Amit Ray Consciousness Factor (ε)." Compassionate AI, 4.11 (2025): 81-83. https://amitray.com/compassionate-ai-consciousness-with-the-ray-consciousness-factor/.

- Ray, Amit. "Quantum Emotion Spaces and Adding Consciousness Factor ε in Robotic Consciousness." Compassionate AI, 4.11 (2025): 81-83. https://amitray.com/quantum-emotion-spaces-and-robotic-consciousness/.

- Ray, Amit. "Unconscious–Conscious Emotional Interaction and Consciousness Factor ε in Robotic Consciousness." Compassionate AI, 4.11 (2025): 81-83. https://amitray.com/unconscious-conscious-emotional-interaction-in-robotic-consciousness/.

Implementing Quantum Generative Adversarial Networks (qGANs): The Ultimate Guide

Abstract

Quantum Generative Adversarial Networks (qGANs) represent a cutting-edge fusion of quantum computing and machine learning, leveraging quantum phenomena like superposition and entanglement to model complex data distributions. This guide provides a comprehensive framework for implementing qGANs, tailored to current Noisy Intermediate-Scale Quantum (NISQ) devices. We outline the theoretical foundations, contrasting qGANs with classical GANs, and detail hybrid quantum-classical architectures that mitigate NISQ limitations.

The guide includes prerequisites, a step-by-step implementation using Qiskit and PyTorch, and a complete code example for a qGAN implementation. We explore optimization techniques, such as noise mitigation and Rényi divergence-based losses, and discuss applications in data augmentation and financial modeling. Challenges like hardware noise, scalability, and training instability are addressed with solutions like tensor networks and quantum kernel discriminators. Supported by verified references, this guide serves as a practical resource for researchers and practitioners in quantum machine learning.

Introduction

Quantum Generative Adversarial Networks (qGANs) merge the generative power of classical Generative Adversarial Networks (GANs) with quantum computing's unique capabilities, such as superposition and entanglement, to model complex data distributions. As of September 2025, qGANs are pivotal in quantum machine learning (QML), offering potential exponential speedups for tasks like data augmentation and quantum state generation. This guide provides a comprehensive roadmap for implementing qGANs, from theoretical foundations to practical deployment on Noisy Intermediate-Scale Quantum (NISQ) devices, drawing on recent advancements [1, 2, 3].

A classical GAN consists of two main components trained in a zero-sum game: a Generator and a Discriminator. In a qGAN, the generator is typically replaced with a Quantum Generator—a parameterized quantum circuit that produces classical data upon measurement. The discriminator often remains a classical neural network, creating a powerful hybrid approach [3].
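The two-player game can be made concrete with the binary cross-entropy losses commonly used to train GANs. This is a minimal NumPy sketch; the discriminator outputs are made-up numbers for illustration, not results from any model, and the generator loss shown is the widely used non-saturating variant.

```python
import numpy as np

def bce(pred, target, eps=1e-12):
    """Binary cross-entropy averaged over a batch of probabilities."""
    pred = np.clip(pred, eps, 1 - eps)
    return float(-np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred)))

# Hypothetical discriminator outputs D(x) in [0, 1] (illustrative values)
d_real = np.array([0.9, 0.8, 0.95])   # on real samples
d_fake = np.array([0.2, 0.1, 0.3])    # on generator samples

# Discriminator wants D(real) -> 1 and D(fake) -> 0
d_loss = bce(d_real, np.ones(3)) + bce(d_fake, np.zeros(3))
# Generator wants the discriminator to label its samples as real
g_loss = bce(d_fake, np.ones(3))
print(round(d_loss, 3), round(g_loss, 3))
```

With these numbers the discriminator is winning: its loss is small while the generator loss is large. In a qGAN the values in `d_fake` would come from samples measured on the quantum generator.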

Hands-On Quantum Machine Learning: Beginner to Advanced Step-by-Step Guide

This comprehensive hands-on guide bridges classical machine learning (ML) and quantum computing, emphasizing the QC sector (quantum algorithms for classical data) and QQ sector (quantum algorithms for quantum data). This guide covers foundational principles, key algorithms of quantum machine learning, applications, theoretical aspects (trainability, generalization, complexity), and practical implementations.

Introduction | Step 1: Quantum Basics | Step 2: Quantum Kernels | Step 3: Quantum Neural Networks | Step 4: Quantum Transformers | Step 5: Evaluation & Scaling | Next Steps

Why This Guide? Quantum ML (QML) leverages quantum computers' superposition and entanglement for potential speedups in ML tasks like classification and generation. As of September 10, 2025, quantum hardware (e.g., IBM's 1000+ qubit systems) is advancing, making QML accessible via simulators and cloud devices. This guide is designed for ML experts with no quantum background—start simple and build up.

Overall Structure: Progress from basics to advanced topics. Each step includes detailed explanations, theoretical insights, hands-on code (using Qiskit), and tips for troubleshooting.

Total estimated time: 10-20 hours for basics + demos.

Prerequisites and Setup

Before diving in, ensure you have:

Step 1: Grasp the Basics of Quantum Computing

Quantum ML runs on quantum processors, so master qubits, circuits, and linear algebra here. This step builds intuition for why quantum can help: an N-qubit register in superposition carries amplitudes over 2^N basis states at once (although a measurement returns only one outcome), and entanglement creates correlations between qubits that have no compact classical description. Both matter for ML's high-dimensional data.

Key Concepts (Detailed Explanation)

Classical Bits vs. Qubits: Classical bits are 0 or 1 (binary). Qubits are in superposition: α|0⟩ + β|1⟩ (complex α, β with |α|^2 + |β|^2 = 1). For N qubits, state space is 2^N-dimensional—exponential scaling enables encoding vast datasets efficiently (e.g., 10 qubits = 1024 states).

Density Matrices: Describe mixed states post-measurement (probabilistic). Useful for noisy quantum data in ML.
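The difference between a pure superposition and a classical mixture shows up in the purity Tr(ρ²). A small NumPy sketch, using illustrative single-qubit states chosen here rather than taken from the guide:

```python
import numpy as np

ket0 = np.array([1, 0], dtype=complex)
ket1 = np.array([0, 1], dtype=complex)
ket_plus = (ket0 + ket1) / np.sqrt(2)

def density(ket):
    """Density matrix |psi><psi| of a pure state."""
    return np.outer(ket, ket.conj())

def purity(rho):
    """Tr(rho^2): 1 for pure states, 1/d for the maximally mixed state."""
    return float(np.real(np.trace(rho @ rho)))

rho_pure = density(ket_plus)
# Equal classical mixture of |0> and |1>, e.g. a fully dephased |+> state
rho_mixed = 0.5 * density(ket0) + 0.5 * density(ket1)

print(purity(rho_pure), purity(rho_mixed))
```

Both states give 50/50 outcomes when measured in the computational basis, but only the pure state retains the off-diagonal coherences that quantum algorithms rely on.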

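Quantum Circuits: Sequence of gates: Hadamard (H) creates superposition, Pauli-X flips (like NOT), CNOT entangles. Universality: these gates alone are not universal; adding a non-Clifford gate such as T (or arbitrary single-qubit rotations) allows any computation to be approximated.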
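The gates named above are small matrices, so a two-qubit circuit can be checked by hand. The sketch below uses plain NumPy and the textbook ordering in which the first qubit is the leftmost tensor factor (Qiskit uses the opposite, little-endian convention).

```python
import numpy as np

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)   # Hadamard
X = np.array([[0, 1], [1, 0]])                 # Pauli-X (NOT)
I2 = np.eye(2)
# CNOT with the first (left) qubit as control; basis order |00>, |01>, |10>, |11>
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]])

flipped = X @ np.array([1.0, 0.0])             # X|0> = |1>

ket00 = np.zeros(4)
ket00[0] = 1.0
# H on the first qubit, then CNOT: the Bell state (|00> + |11>)/sqrt(2)
bell = CNOT @ np.kron(H, I2) @ ket00
probs = np.abs(bell) ** 2
print(np.round(bell, 3), np.round(probs, 3))
```

Only the outcomes 00 and 11 have non-zero probability, which is the signature of the entanglement created by the CNOT.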

Read-In/Read-Out: Read-in encodes classical data (e.g., vectors) into quantum states. Read-out measures (collapses wavefunction) to get classical results—probabilistic, so average multiple runs ("shots").
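Why read-out needs many shots can be seen by sampling from the Born-rule probabilities directly. This is a minimal NumPy sketch with an illustrative state [0.6, 0.8]; the random seed is an arbitrary choice made here for repeatability.

```python
import numpy as np

rng = np.random.default_rng(seed=7)      # fixed seed so the run is repeatable
state = np.array([0.6, 0.8])             # amplitudes of |0> and |1>
probs = np.abs(state) ** 2               # Born rule: [0.36, 0.64]

def estimate_p1(shots):
    """Fraction of simulated shots that returned outcome 1."""
    outcomes = rng.choice([0, 1], size=shots, p=probs)
    return float(outcomes.mean())

for shots in (10, 100, 10_000):
    print(shots, round(estimate_p1(shots), 3))
```

The estimate fluctuates strongly at 10 shots and settles near 0.64 as the shot count grows, with the statistical error shrinking like 1/√shots.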

Quantum Linear Algebra: Block encoding embeds matrices into unitaries for ops like inversion (faster than classical for sparse matrices). QSVT transforms singular values—backbone for QML speedups.

Theoretical Foundations (Easy Guide)

Quantum advantage: The BQP class (quantum polynomial time) contains problems believed to be classically hard (e.g., factoring). NISQ (noisy, current era) vs. FTQC (error-corrected, future). Quantum Volume (QV): Measures device power; e.g., a QV of 2^20 was reported for trapped-ion hardware in 2024.

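Why for ML? Classical ML struggles with big data (e.g., GPT-3 training has been estimated at 355 GPU-years). Quantum: The HHL algorithm solves sparse, well-conditioned linear systems in time polylogarithmic in the dimension, provided the data can be loaded efficiently and only summary statistics of the solution are read out.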

Practical Implementation (Step-by-Step Guide)

Substep 1.1: Setup Qiskit and Basic Qubit

Substep 1.2: Amplitude Encoding (Read-In)

    import numpy as np
    from qiskit import QuantumCircuit
    from qiskit.circuit.library import StatePreparation
    from qiskit.quantum_info import Statevector

    def amplitude_encode(data):
        """Load a vector into the amplitudes of ceil(log2(len(data))) qubits."""
        data = np.asarray(data, dtype=complex)
        n_qubits = max(1, int(np.ceil(np.log2(len(data)))))
        # Pad to length 2^n and normalize so the amplitudes form a valid state
        amplitudes = np.zeros(2**n_qubits, dtype=complex)
        amplitudes[:len(data)] = data
        amplitudes /= np.linalg.norm(amplitudes)
        qc = QuantumCircuit(n_qubits)
        # StatePreparation synthesizes a rotation-and-CNOT circuit
        # that prepares the target amplitudes from |0...0>
        qc.append(StatePreparation(amplitudes), range(n_qubits))
        state = Statevector.from_instruction(qc)
        return qc, state

    data = np.array([0.6 + 0j, 0.8 + 0j])  # Complex for phases
    norm = np.linalg.norm(data)
    qc, state = amplitude_encode(data)
    print(state.data)  # Approx [0.6/norm, 0.8/norm]

Substep 1.3: Block Encoding

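    import numpy as np
    from scipy.linalg import sqrtm
    from qiskit import QuantumCircuit
    from qiskit.quantum_info import Operator

    A = np.array([[1, 0], [0, 2]], dtype=float)
    A = A / np.linalg.norm(A)  # Frobenius norm, so the spectral norm is below 1
    I = np.eye(2)
    # Unitary dilation: A sits in the top-left block (ancilla in |0>)
    U = np.block([[A, sqrtm(I - A @ A.conj().T)],
                  [sqrtm(I - A.conj().T @ A), -A.conj().T]])
    qc = QuantumCircuit(2)  # qubit 0: system, qubit 1: ancilla (more significant bit)
    qc.unitary(U, [0, 1], label="U_A")
    print(qc.draw())  # Visualize circuit (use qc.draw('mpl') in Jupyter)
    print(np.round(Operator(qc).data[:2, :2].real, 3))  # top-left block equals A

Challenges & Tips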

Step 2: Implement Quantum Kernel Methods

Kernels enable non-linear ML by mapping data to high-dimensional spaces. Quantum kernels use quantum circuits as feature maps into a 2^n-dimensional Hilbert space (n qubits), which may improve separability (e.g., for data with entanglement-like correlations).

Key Concepts (Detailed Explanation)

Classical Kernels: K(x,y) = <φ(x)|φ(y)> (e.g., RBF for similarity). Dual form: Focus on data points, not features—scalable.

Quantum Kernels: A circuit U_φ(x) encodes x into the state |φ(x)⟩ = U_φ(x)|0⟩; K(x,y) = |⟨φ(x)|φ(y)⟩|^2 = |⟨0|U_φ(x)† U_φ(y)|0⟩|^2. Feature maps: Angle (data → rotations, easy), Amplitude (dense encoding).

Relation to Classical: Quantum kernels are like classical but in Hilbert space—universal if Haar-random.

Examples: Fidelity kernel (swap test), projection kernels.
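A fidelity kernel with angle encoding is simple enough to evaluate exactly in NumPy. The sketch below assumes one RY rotation per feature and no entangling gates, so it is classically easy and serves only to show the structure of the Gram matrix; the helper names are illustrative, not part of any library.

```python
import numpy as np
from functools import reduce

def angle_encode(x):
    """Product state: feature x_j becomes RY(x_j)|0> = [cos(x_j/2), sin(x_j/2)] on qubit j."""
    qubits = [np.array([np.cos(v / 2), np.sin(v / 2)]) for v in x]
    return reduce(np.kron, qubits)

def fidelity_kernel(X):
    """Gram matrix K[i, j] = |<phi(x_i)|phi(x_j)>|^2."""
    states = np.array([angle_encode(x) for x in X])
    return np.abs(states @ states.conj().T) ** 2

rng = np.random.default_rng(0)
X = rng.random((4, 2))            # 4 samples, 2 features -> 2 qubits
K = fidelity_kernel(X)
print(np.round(K, 3))
```

The resulting matrix is symmetric, has ones on the diagonal and is positive semidefinite, so it can be passed to any kernel method. Entangling feature maps change the states but not this overall recipe.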

Theoretical Foundations (Easy Guide)

Expressivity: Can approximate any kernel (via unitaries). Generalization: Bounds via covering numbers; quantum kernels are often argued to do better for small samples (error of order O(√(d/M)), with M training samples and feature dimension d = 2^n for n qubits).

Trainability: Parameterized maps avoid plateaus if shallow. Complexity: Poly(N) queries for evaluation.

Practical Implementation (Step-by-Step Guide)

Substep 2.1: Build Feature Map

Substep 2.2: Kernel Matrix

Substep 2.3: MNIST Classification

Challenges & Tips

Step 3: Build Quantum Neural Networks (QNNs)

QNNs hybridize quantum circuits (layers) with classical training. They mimic NNs but exploit quantum parallelism for deeper expressivity.

Key Concepts (Detailed Explanation)

Classical Recap: Perceptron (linear classifier) → MLP (non-linear, backprop).

Fault-Tolerant Q-Perceptron: Uses Grover for O(√N) updates (vs. O(N) classical).

NISQ QNNs: Variational: Parameterized gates + classical optimizer. Discriminative (e.g., VQC for classification); Generative (qGANs for data synth).
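The variational loop of parameterized gates plus a classical optimizer can be illustrated on a single qubit. The sketch below simulates RY(θ)|0⟩ in NumPy, estimates the gradient with the parameter-shift rule and runs plain gradient descent; the start value and learning rate are arbitrary choices made for this example.

```python
import numpy as np

def expectation_z(theta):
    """<Z> after RY(theta)|0>; the state is [cos(theta/2), sin(theta/2)]."""
    state = np.array([np.cos(theta / 2), np.sin(theta / 2)])
    return float(state[0] ** 2 - state[1] ** 2)

def parameter_shift_grad(f, theta):
    """Exact gradient for gates of the form exp(-i * theta * P / 2), such as RY."""
    return (f(theta + np.pi / 2) - f(theta - np.pi / 2)) / 2

theta, lr = 0.3, 0.4                  # hypothetical start value and learning rate
for step in range(60):
    # Gradient descent on the cost <Z>; its minimum of -1 is reached at theta = pi
    theta -= lr * parameter_shift_grad(expectation_z, theta)

final_cost = expectation_z(theta)
print(round(theta, 3), round(final_cost, 3))
```

On hardware, each call to `expectation_z` would be replaced by a batch of shots, which is why two circuit evaluations per parameter are needed for every gradient step.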

Theoretical Foundations (Easy Guide)

Expressivity: Approximate any function (Barren Plateaus: Gradients vanish in deep circuits—use shallow). Generalization: Overparametrized QNNs like classical (VC bounds). Trainability: Layer-wise or Gaussian init mitigates plateaus.

Practical Implementation (Step-by-Step Guide)

Substep 3.1: Quantum Classifier (VQC)

Substep 3.2: Quantum Patch GAN

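    import numpy as np
    import torch
    from torch import nn
    from qiskit import QuantumCircuit
    from qiskit.quantum_info import Statevector

    N_QUBITS = 4

    def qubit_probs(angles, noise):
        """P(qubit = 1) per qubit; probabilities (not single shots) keep training differentiable."""
        qc = QuantumCircuit(N_QUBITS)
        for q in range(N_QUBITS):
            qc.ry(np.pi * float(noise[q]), q)  # latent vector enters as rotations
        for i, a in enumerate(angles):
            qc.ry(float(a), i % N_QUBITS)  # trainable rotations
        qc.h(range(N_QUBITS))  # Superposition
        sv = Statevector(qc)
        return np.array([sv.probabilities([q])[1] for q in range(N_QUBITS)])

    class QuantumLayer(torch.autograd.Function):
        """Makes the circuit differentiable via the parameter-shift rule."""
        @staticmethod
        def forward(ctx, params, noise):
            ctx.save_for_backward(params, noise)
            probs = qubit_probs(params.detach().numpy(), noise.numpy())
            return torch.tensor(probs, dtype=torch.float32)

        @staticmethod
        def backward(ctx, grad_out):
            params, noise = ctx.saved_tensors
            p, z, g = params.detach().numpy(), noise.numpy(), grad_out.numpy()
            grads = np.zeros_like(p)
            for k in range(len(p)):
                shift = np.zeros_like(p)
                shift[k] = np.pi / 2
                grads[k] = g @ (qubit_probs(p + shift, z) - qubit_probs(p - shift, z)) / 2
            return torch.tensor(grads, dtype=torch.float32), None

    class QuantumGenerator(nn.Module):
        def __init__(self, n_params=20):
            super().__init__()
            self.params = nn.Parameter(torch.randn(n_params))  # 20 trainable angles

        def forward(self, noise):  # noise: latent vector of length N_QUBITS
            return QuantumLayer.apply(self.params, noise)

    gen = QuantumGenerator()
    disc = nn.Sequential(nn.Linear(4, 16), nn.ReLU(), nn.Linear(16, 1), nn.Sigmoid())  # Classical discriminator
    optimizer_g = torch.optim.Adam(gen.parameters(), lr=0.01)
    optimizer_d = torch.optim.Adam(disc.parameters(), lr=0.01)
    bce = nn.BCELoss()
    real_data = torch.rand(32, 4)  # Toy real patches

    # Train loop (simplified, 50 epochs; the parameter-shift gradients make it slow)
    for epoch in range(50):
        # Train discriminator (generator output detached)
        fake = torch.stack([gen(torch.rand(4)) for _ in range(32)]).detach()
        d_loss = bce(disc(fake), torch.zeros(32, 1)) + bce(disc(real_data), torch.ones(32, 1))
        optimizer_d.zero_grad(); d_loss.backward(); optimizer_d.step()
        # Train generator
        fake = torch.stack([gen(torch.rand(4)) for _ in range(32)])
        g_loss = bce(disc(fake), torch.ones(32, 1))
        optimizer_g.zero_grad(); g_loss.backward(); optimizer_g.step()

    print('GAN trained; generate: gen(torch.rand(4))')

Challenges & Tips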

Step 4: Develop Quantum Transformers

Transformers dominate NLP/CV. Proposed quantum versions apply quantum subroutines to attention, aiming for quadratic speedups (O(N) vs. O(N^2) in the sequence length N).

Key Concepts (Detailed Explanation)

Classical Transformer: Tokens → Embed → Self-Attention (QKV matrices) → FFN → Residuals/Norm.

Quantum Transformer: Quantum attention: Block-encode Q,K,V; QSVT for scaled dot-products. Quantum FFN: QNN layers. Residuals: Add quantum states.
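For reference, the classical self-attention step that the quantum variants aim to accelerate can be written in a few lines of NumPy. The sizes and random weights below are toy values chosen for this sketch.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])   # N x N matrix: the O(N^2) cost
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(1)
N, d = 4, 8                                    # toy sequence length and embedding size
X = rng.normal(size=(N, d))
Wq, Wk, Wv = (rng.normal(size=(d, d)) for _ in range(3))
out, weights = self_attention(X, Wq, Wk, Wv)
print(out.shape, weights.sum(axis=1))
```

The N x N `scores` matrix is the quadratic bottleneck; quantum proposals try to estimate its entries, or the softmax of them, with fewer operations.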

Theoretical Foundations (Easy Guide)

Runtime: Grover-like search for attention gives a quadratic speedup in theory. Numerical evidence: the source reports 2x faster inference at sequence length 100.
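The quadratic speedup of Grover-type search can be reproduced with a tiny state-vector simulation. This NumPy sketch searches 16 items for one marked index (the index 5 is an arbitrary choice) and uses the usual estimate of about (π/4)·√N iterations.

```python
import numpy as np

def grover_success_probability(n_items, marked, iterations):
    """Simulate Grover search on a state vector and return P(marked)."""
    state = np.full(n_items, 1 / np.sqrt(n_items))     # uniform superposition
    for _ in range(iterations):
        state[marked] *= -1                            # oracle: flip the marked amplitude
        state = 2 * state.mean() - state               # diffusion: inversion about the mean
    return float(state[marked] ** 2)

n_items = 16
optimal = int(np.floor(np.pi / 4 * np.sqrt(n_items)))  # about (pi/4) * sqrt(N) iterations
p_success = grover_success_probability(n_items, marked=5, iterations=optimal)
print(optimal, round(p_success, 3))
```

Three oracle calls find the marked item with probability of roughly 0.96, compared with 1/16 for a single random guess and about N/2 classical queries on average.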

Practical Implementation (Step-by-Step Guide)

Substep 4.1: Quantum Self-Attention

    import numpy as np
    from qiskit import QuantumCircuit, transpile
    from qiskit_aer import AerSimulator

    def quantum_attention(queries, keys, n_qubits=8):
        half = n_qubits // 2  # first half of the register holds Q, second half K
        qc = QuantumCircuit(n_qubits)
        # Encode Q/K as rotations
        for i in range(half):
            qc.ry(queries[i] * np.pi, i)
            qc.ry(keys[i] * np.pi, i + half)
        # Entangle each query qubit with its key qubit (a crude stand-in for a swap test)
        for i in range(half):
            qc.cx(i, i + half)  # Control for similarity
        # QSVT placeholder: a full implementation would apply the softmax here
        qc.measure_all()
        return qc

    # Example: Toy seq
    queries = np.random.rand(4)
    keys = np.random.rand(4)
    qc = quantum_attention(queries, keys)
    backend = AerSimulator()
    result = backend.run(transpile(qc, backend), shots=100).result().get_counts()
    print(result)  # Prob dist as attention weights

Substep 4.2: Full Transformer Inference

Challenges & Tips

Step 5: Evaluate, Optimize, and Scale

Assess QML models rigorously; scale from sim to hardware.

Key Concepts (Detailed Explanation)

Metrics: Accuracy, F1; Quantum-specific: Sample complexity (queries), fidelity (state match).
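The metrics listed above are easy to compute by hand, which helps when checking library output. Below is a small NumPy sketch with made-up labels and two illustrative single-qubit states; the function names are hypothetical helpers, not library calls.

```python
import numpy as np

def accuracy(y_true, y_pred):
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))

def f1_score_binary(y_true, y_pred):
    """F1 for the positive class (label 1)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    return float(2 * tp / (2 * tp + fp + fn)) if tp else 0.0

def state_fidelity_pure(psi, phi):
    """|<psi|phi>|^2 for two normalised pure states."""
    return float(np.abs(np.vdot(psi, phi)) ** 2)

y_true = [1, 0, 1, 1, 0, 0]          # made-up labels for illustration
y_pred = [1, 0, 0, 1, 0, 1]
plus = np.array([1, 1]) / np.sqrt(2)
zero = np.array([1, 0])
print(accuracy(y_true, y_pred), f1_score_binary(y_true, y_pred), state_fidelity_pure(plus, zero))
```

Accuracy and F1 judge the classical predictions, while fidelity compares the quantum states themselves and is the natural metric for state-preparation or generative tasks.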

Theoretical Foundations (Easy Guide)

Generalization: Hoeffding inequality for bounds. No-free-lunch: Quantum shines on entangled data.
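Hoeffding's inequality also gives a practical shot budget: for outcomes bounded in [0, 1], the probability that an n-shot average deviates from its mean by at least ε is at most 2·exp(−2nε²). A short sketch using only the standard library; the tolerance values are example choices.

```python
import math

def shots_needed(epsilon, delta):
    """Smallest n with 2 * exp(-2 * n * epsilon^2) <= delta for outcomes in [0, 1]."""
    return math.ceil(math.log(2 / delta) / (2 * epsilon ** 2))

def hoeffding_bound(n, epsilon):
    """Upper bound on P(|estimate - true mean| >= epsilon) after n shots."""
    return min(1.0, 2 * math.exp(-2 * n * epsilon ** 2))

n = shots_needed(epsilon=0.05, delta=0.05)
print(n, hoeffding_bound(n, 0.05))
```

Halving ε quadruples the required shots, which is why tight accuracy targets become expensive quickly on hardware.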

Practical Implementation (Step-by-Step Guide)

Substep 5.1: Metrics & Optimization

Substep 5.2: Scaling

Substep 5.3: Clone Repo & Run All

Challenges & Tips

Resources

Next Steps & Conclusion

Mastered basics? Experiment: Hybrid QNNs on custom data. Read appendices (notations, inequalities). Dive into biblio (e.g., Havlíček for kernels). Quantum era: Utility now, advantage soon. Questions? Explore repo issues.

Ethical Note: QML for good—focus on sustainable apps.

References:

Quantum Cheshire Cat Generative AI Model

"Quantum Cheshire Cat Generative AI Model", a book written by Sri Amit Ray, is a groundbreaking exploration of Quantum Machine Learning that introduces a novel model integrating the Quantum Cheshire Cat phenomenon with Quantum Generative Adversarial Networks (QGANs). The book also introduces the concept of Quantum Mirage Data to machine learning for the first time.


Quantum Machine Learning: Algorithms and Complexities

Abstract:

This article provides a comprehensive overview of QML algorithms and explores their complexities. It explores the characteristics of quantum data, hybrid quantum-classical models, variational quantum algorithms, quantum-enhanced reinforcement learning, and the difficulties associated with quantum machine learning. Overall, this article provides a valuable resource for researchers and practitioners interested in understanding the algorithms, complexities, and potential of Quantum Machine Learning, shedding light on its current state and future prospects.

Introduction:

Quantum machine learning, also known as QML, is a blooming field of modern artificial intelligence that integrates quantum computing with machine learning. It aims to enhance traditional machine learning algorithms and develop novel computational methods.

This article examines the inner workings of quantum machine learning and related topics. It includes the fundamentals of quantum computing, quantum machine learning algorithms, the characteristics of quantum data, hybrid quantum-classical models, variational quantum algorithms, quantum-enhanced reinforcement learning, and the difficulties associated with quantum machine learning.

"The fusion of quantum computing and artificial intelligence, paving the way for groundbreaking innovation and endless opportunities." - Sri Amit Ray

Quantum Machine Learning: The 10 Key Properties

In this article, we discussed the 10 properties and characteristics of hybrid classical-quantum machine learning approaches for our Compassionate AI Lab projects. Quantum computers with the power of machine learning will disrupt every industry. They will change the way we live in this world and the way we fight diseases, care for old people and blind people, invent new medicines and new materials, and solve health, climate and social issues. Similar to the 10 V's of big data we have identified 10 M's of quantum machine learning (QML). These 10 properties of quantum machine learning can be argued, debated and fine-tuned for further refinements.

Hybrid Classical Quantum Machine Learning

The Compassionate AI Lab is currently developing a hybrid classical-quantum machine learning (HQML) framework, a quantum computing virtual plugin that bridges the available quantum computing facilities and classical machine learning software such as TensorFlow, Scikit-learn, Keras, XGBoost, LightGBM, and cuDNN.

Presently the hybrid classical-quantum machine learning (HQML) framework includes the quantum learning algorithms like: Quantum Neural Networks, Quantum Boltzmann Machine, Quantum Principal Component Analysis, Quantum k-means algorithm, Quantum k-medians algorithm, Quantum Bayesian Networks and Quantum Support Vector Machines.

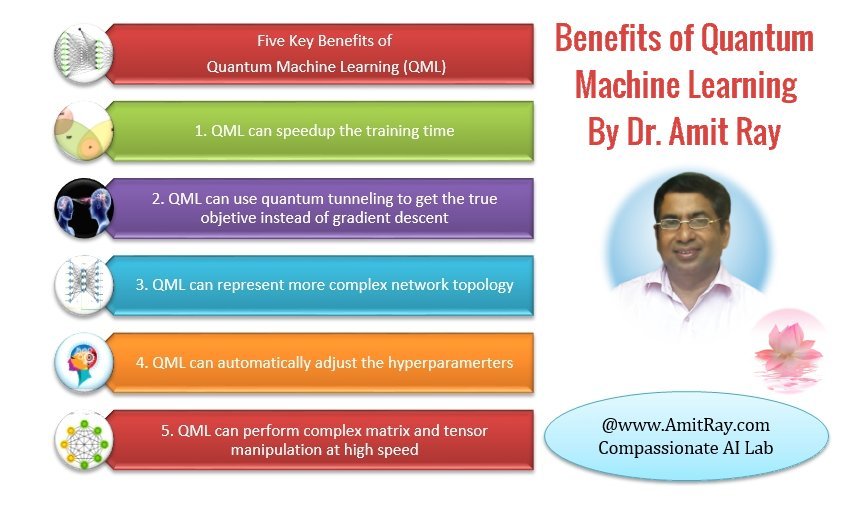

Five Key Benefits of Quantum Machine Learning


Here, Dr. Amit Ray discusses the five key benefits of quantum machine learning.

Quantum machine learning is evolving very fast and gaining enormous momentum due to its huge potential. Quantum machine learning is the key technology for future compassionate artificial intelligence. In our Compassionate AI Lab, we have conducted several experiments on quantum machine learning in the areas of drug discovery, combating antibiotic-resistant bacteria, and multi-omics data integration.

We have found that in drug design and multi-omics data integration, the power of deep learning is severely restricted on classical computers. Hence, with limited facilities, we have tested many hybrid classical-quantum machine learning algorithms at our Compassionate AI Lab.

Five Benefits of Quantum Machine Learning

Roadmap for 1000 Qubits Fault-tolerant Quantum Computers

How many qubits are needed to outperform conventional computers? How can a quantum computer be protected from the effects of decoherence? And how can large-scale fault-tolerant quantum computers with more than 1,000 qubits be designed? These are the three basic questions we address in this article.

Qubit technologies, qubit quality, qubit count, qubit connectivity and qubit architectures are the five key areas of quantum computing. In this article, we explain the practical issues of designing large-scale quantum computers.

Quantum Computing with Many World Interpretation Scopes and Challenges

The Many-Worlds Interpretation (MWI) of quantum mechanics posits that all possible outcomes of quantum measurements are realized, each in a separate, non-communicating branch of the universe. This interpretation challenges the traditional Copenhagen view, which involves wave function collapse to a single outcome. In the context of quantum computing, MWI offers a framework for understanding quantum parallelism—the ability of quantum computers to process multiple computations simultaneously.

In this article, we explore how MWI aligns with quantum computing's principles, the opportunities it presents, and the challenges we must address to harness its full potential.

Many scientists believe that the Many-Worlds Interpretation (MWI) of quantum mechanics is self-evidently absurd for quantum computing. Recently, however, a growing number of scientists believe that MWI has a real future in quantum computing, because it can provide true quantum parallelism. Here, I briefly discuss the scopes and challenges of MWI for future quantum computing, as a basis for exploring the deeper aspects of qubits and quantum computing with MWI.

This tutorial is for the researchers, volunteers and students of the Compassionate AI Lab for understanding the deeper aspects of quantum computing for implementing large-scale compassionate artificial intelligence projects.

7 Primary Qubit Technologies for Quantum Computing

7 Core Qubit Technologies for Quantum Computing

Here we discuss the advantages and limitations of seven key qubit technologies for designing efficient quantum computing systems. The seven qubit types are: superconducting qubits, quantum dot qubits, trapped ion qubits, photonic qubits, defect-based qubits, topological qubits, and nuclear magnetic resonance (NMR) qubits. They are the seven pathways for designing effective quantum computing systems. Each has its own limitations and advantages. We also discuss the hierarchies of qubit types. Earlier, we discussed the seven key requirements for designing efficient quantum computers; of these, long coherence time and high scalability of the qubits are the two core requirements for implementing effective quantum computing systems.

Quantum computing is the key technology for future artificial intelligence. In our Compassionate AI Lab, we use AI-based quantum computing algorithms for human emotion analysis, for simulating human homeostasis with quantum reinforcement learning, and for other quantum compassionate AI projects. This review tutorial is intended to help the researchers, volunteers and students of the Compassionate AI Lab understand the deeper aspects of qubit technologies needed to implement compassionate artificial intelligence projects. We follow a scalable, layered hybrid computing architecture of CPU, GPU, TPU and QPU, with virtual quantum plugin interfaces.

Earlier we discussed Spin-orbit Coupling Qubits for Quantum Computing and AI, Quantum Computing Algorithms for Artificial Intelligence, Quantum Computing and Artificial Intelligence, Quantum Computing with Many World Interpretation Scopes and Challenges, and Quantum Computer with Superconductivity at Room Temperature. Here, we focus on the primary qubit technologies for developing efficient quantum computers.

Building a quantum computer differs greatly from building a classical computer. The core of quantum computing is the qubit. Unlike classical bits, qubits can exist in a superposition of the 0 and 1 states and can also be entangled with one another, so that their measurement outcomes are correlated. Qubits can be made using single photons, trapped ions, and atoms in high-finesse cavities.
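The difference between a classical bit pair and two entangled qubits can be illustrated with a small state-vector sketch. The snippet below is only an illustration under stated assumptions: the helper names `bell_state` and `measure`, the fixed random seed, and the number of samples are hypothetical choices and are not taken from the article. It prepares the Bell state (|00> + |11>)/sqrt(2) and samples measurement outcomes according to the squared amplitudes.

```python
import math
import random

def bell_state():
    """Return amplitudes of (|00> + |11>)/sqrt(2), indexed as 00, 01, 10, 11."""
    s = 1 / math.sqrt(2)
    # A Hadamard gate on the first qubit of |00> gives (|00> + |10>)/sqrt(2)
    state = [s, 0.0, s, 0.0]
    # CNOT (first qubit controls, second is the target) swaps |10> and |11>
    state[2], state[3] = state[3], state[2]
    return state

def measure(state, rng):
    """Sample one two-bit outcome with probability |amplitude|^2."""
    probs = [abs(a) ** 2 for a in state]
    index = rng.choices(range(4), weights=probs)[0]
    return format(index, "02b")

rng = random.Random(0)  # fixed seed so the sketch is repeatable
state = bell_state()
outcomes = [measure(state, rng) for _ in range(1000)]
```

Every sampled outcome is either "00" or "11": each qubit on its own looks random, but the two results always agree, which is the correlation that entanglement provides.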

Superconducting materials and semiconductor quantum dots are promising hosts for qubits in a scalable quantum processor. However, the other qubit technologies have their own advantages and limitations. The details of the seven primary qubit systems are given below:

7 Key Requirements for Quantum Computing

Here, we discuss seven key requirements for implementing efficient quantum computing systems: long coherence time, high scalability, high fault tolerance, the ability to initialize qubits, universal quantum gates, efficient qubit state measurement, and faithful transmission of flying qubits. They serve as seven guidelines for designing effective quantum computing systems.

Quantum computing is the key technology for future artificial intelligence. In our Compassionate AI Lab, we use AI-based quantum computing algorithms for human emotion analysis, for simulating human homeostasis with quantum reinforcement learning, and for other quantum compassionate AI projects. This tutorial is intended to help the researchers, volunteers and students of the Compassionate AI Lab understand the deeper aspects of quantum computing needed to implement compassionate artificial intelligence projects.

Earlier we discussed Spin-orbit Coupling Qubits for Quantum Computing and AI, Quantum Computing Algorithms for Artificial Intelligence, Quantum Computing and Artificial Intelligence, and Quantum Computer with Superconductivity at Room Temperature. Here, we focus on the exact requirements for developing efficient quantum computers.

Building a quantum computer differs greatly from building a classical computer. The core of quantum computing is the qubit. Qubits can be made using single photons, trapped ions, and atoms in high-finesse cavities. Superconducting materials and semiconductor quantum dots are promising hosts for qubits in a quantum processor. When superconducting materials are cooled below their critical temperature, they carry current with zero electrical resistance and therefore without energy loss. These seven requirements are referred to as the DiVincenzo criteria for quantum computing [1].

Quantum Computer with Superconductivity at Room Temperature

A quantum computer built on superconductivity at room temperature could change the landscape of artificial intelligence. In an earlier article we discussed quantum computing algorithms for artificial intelligence. In this article we review the implications of room-temperature superconductivity for quantum computation and its impact on artificial intelligence.

Long coherence time (synchronized), low error rate and high scalability are the three prime requirements for quantum computing. To meet them, quantum computers presently need complex infrastructure involving extreme cooling and ultra-high vacuum. This keeps atomic motion close to zero and isolates the entangled particles, both of which reduce the likelihood of decoherence. The availability of superconductivity at room temperature would be a major leap forward for quantum computers.

Quantum Computing Algorithms for Artificial Intelligence

Dr. Amit Ray explains quantum annealing, Quantum Monte Carlo Tree Search, quantum algorithms for the traveling salesman problem, and quantum algorithms for gradient descent problems in depth.

This tutorial is for the researchers, developers, students and volunteers of the quantum computing team of the Sri Amit Ray Compassionate AI Lab. Many of our researchers and students have asked me to explain quantum computing algorithms in very simple terms. The purpose of this article is to do that.

Earlier we discussed Spin-orbit Coupling Qubits for Quantum Computing and the foundations of quantum computing and artificial intelligence. This article explains the foundational quantum computing algorithms in depth, in a simple way. Here we explain the concepts of quantum annealing, Quantum Monte Carlo Tree Search, quantum algorithms for the traveling salesman problem, and quantum algorithms for gradient descent problems.
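To give a feel for the kind of problem quantum annealing addresses, here is a minimal classical sketch. A quantum annealer searches for the lowest-energy configuration of an Ising-type cost function; the snippet below does not simulate quantum hardware and is not the algorithm from the article. It uses classical simulated annealing, where thermal hops stand in for quantum tunneling, on a hypothetical four-spin problem. The couplings `J`, the fields `h`, the cooling schedule and all function names are assumptions made for illustration only.

```python
import itertools
import math
import random

# Hypothetical 4-spin problem: the couplings J and fields h are made-up values.
J = {(0, 1): 1.0, (1, 2): 1.0, (2, 3): 1.0}
h = [0.5, 0.0, 0.0, 0.0]

def energy(spins):
    """Ising energy E(s) = sum J_ij s_i s_j + sum h_i s_i, with s_i in {-1, +1}."""
    coupling = sum(j * spins[a] * spins[b] for (a, b), j in J.items())
    field = sum(hi * si for hi, si in zip(h, spins))
    return coupling + field

def exact_ground_state():
    """Brute-force search over all 2^n configurations (feasible only for tiny n)."""
    return min(itertools.product((-1, 1), repeat=len(h)), key=energy)

def simulated_annealing(steps=2000, t_start=2.0, t_end=0.01, seed=0):
    """Classical simulated annealing with single-spin flips."""
    rng = random.Random(seed)
    spins = [rng.choice((-1, 1)) for _ in h]
    best = list(spins)
    for step in range(steps):
        # Geometric cooling schedule from t_start down to t_end
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(len(spins))
        old = energy(spins)
        spins[i] = -spins[i]
        delta = energy(spins) - old
        # Metropolis rule: accept downhill moves, sometimes accept uphill ones
        if delta > 0 and rng.random() >= math.exp(-delta / t):
            spins[i] = -spins[i]  # rejected: undo the flip
        elif energy(spins) < energy(best):
            best = list(spins)
    return tuple(best)

exact = exact_ground_state()
found = simulated_annealing()
```

For a problem this small, `exact` can be checked by hand: the alternating configuration (-1, 1, -1, 1) has energy -3.5. Comparing `energy(found)` with `energy(exact)` shows how close the annealing run came; on realistic problem sizes the brute-force check is no longer possible, which is where annealing-style heuristics become interesting.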

Spin-orbit Coupling Qubits for Quantum Computing and AI

The Power of Spin-orbit Coupling Qubits for Quantum Computing

Here, Dr. Amit Ray discusses the power, scope, and challenges of Spin-orbit Coupling Qubits for Quantum Computing with Artificial Intelligence in detail. Quantum computing for artificial intelligence is one of the key research projects of the Compassionate AI Lab. We summarize here some of the recent developments in qubits and spin–orbit coupling for quantum computing.

In digital computing, information is processed as ones and zeros, or binary digits (bits). Their analogues in quantum computing are known as qubits. Qubits are implemented at nanoscale dimensions, in devices such as spintronic devices, single-electron devices and ultra-cold Bose-Einstein condensate devices. Manipulating and measuring the dynamics of quantum states before decoherence sets in is the primary characteristic of quantum computing.

Using electron spin to design electronic devices with new functionalities, and achieving quantum computing with electron spins, are among the most ambitious goals of compassionate artificial superintelligence (AI 5.0). Utilizing quantum effects such as superposition, entanglement, and tunneling for computation is an emerging research field in quantum-computing-based artificial intelligence.

Quantum Computing and Artificial Intelligence

Here, Sri Amit Ray discusses the power, scope, and challenges of Quantum Computing and Artificial Intelligence in detail.

In recent years there has been an explosion of interest in quantum computing and artificial intelligence. Quantum computers with artificial intelligence could revolutionize our society and bring many benefits. Big companies like IBM, Google, Microsoft and Intel are all currently racing to build useful quantum computer systems. They have also made tremendous progress in deep learning and machine intelligence.

Artificial intelligence (AI) is an area of science that emphasizes the development of intelligent systems that can work and behave like humans. Quantum computing essentially uses the laws of quantum mechanics to enhance computing power. These two emerging technologies will likely have a huge transformative impact on our society in the future. Quantum computing is emerging as a vital platform for speeding up machine learning problems that are critical to big data analysis, blockchain and IoT.

The main purpose of this article is to explain some of the basic ideas of quantum computing in the context of advances in artificial intelligence, especially quantum deep machine learning algorithms, which can be used for designing compassionate artificial superintelligence.
