Within our compassionate AI Lab, we have diligently worked to create a series of AI indexes and measurement criteria with the objective of safeguarding the interests of future generations and empowering humanity.

In this article, we present an exploration of ten indispensable ethical AI indexes that are paramount for the responsible AI development and deployment of Large Language Models (LLMs) through the intricate processes of data training and modeling.

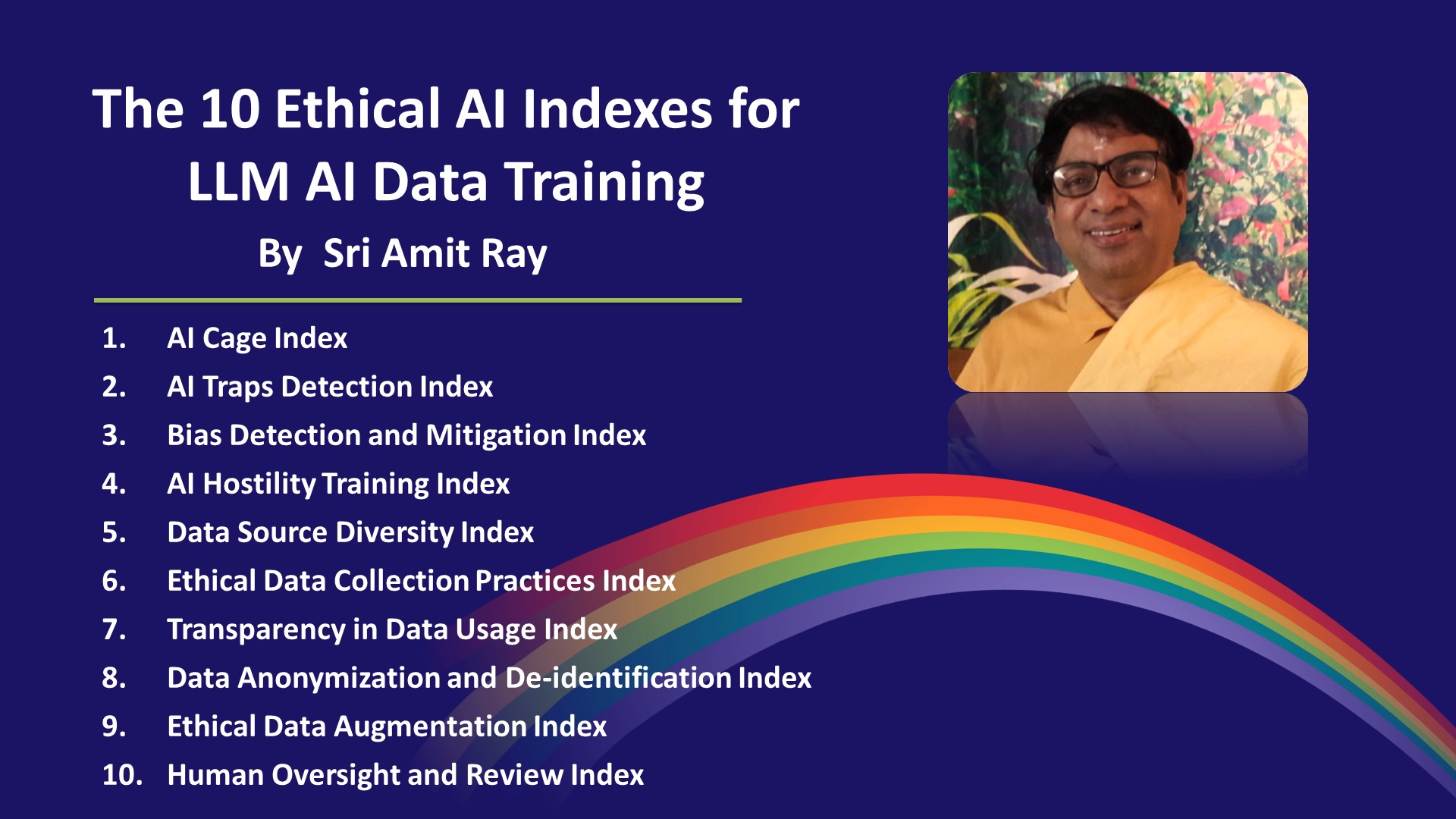

The 10 Ethical AI Indexes For LLM AI Sri Amit Ray Compassionate AI Lab

These measurement criteria are meticulously crafted to address the imperative tasks of mitigating biases, sidestepping deceptive pitfalls, ensuring unwavering transparency, advocating for diverse data sources, and upholding rigorous safety, and ethical standards throughout every facet of AI model evolution. Notably, the AI Hostility Data Training Index stands as a formidable instrument in preventing AI technologies from inadvertently propagating negativity, hostility, or divisiveness within the fabric of our society.

Implementing these indexes is fundamental to fostering AI training processes that prioritize fairness, transparency, and ethical considerations [7]. By adhering to these principles, developers can harness the power of AI to drive positive change while minimizing potential risks and pitfalls.

Introduction

These 10 Ethical Indexes related to AI Data Preparation and Training focus on mitigating biases, avoiding traps, ensuring transparency, promoting diverse data sources, and upholding ethical practices throughout the AI model’s development. Implementing these indexes will help foster responsible AI training processes that prioritize fairness, transparency, and ethical considerations.

In this article, we discussed the ten essential ethical indexes that must be considered during the data training and modeling of Large Language Models (LLMs) to ensure their responsible and beneficial deployment.

AI Cage Index

The AI Cage Index measures the extent to which AI models are confined within narrow or limited datasets, evaluating how diverse and representative the training data is. It ensures that AI systems are not restricted to specific viewpoints or biases, promoting broader perspectives. This index is designed to assess and ensure that AI models are not confined within narrow or limited datasets, but rather exposed to a diverse range of viewpoints, perspectives, and contexts, promoting a more comprehensive and inclusive learning process.

AI Traps Detection Index

The AI Traps Detection Index assesses the AI model’s ability to identify and avoid data traps, such as biased or misleading information, during the training process. It ensures that the model does not inadvertently learn from unreliable or harmful sources. The AI Traps Detection Index contributes to the prevention of harmful content generation by ensuring that the AI model is less likely to produce offensive, misleading, or false information.

Bias Detection and Mitigation Index

The Bias Detection and Mitigation Index evaluate whether the AI model can detect and address biases present in the training data [4]. It ensures that the model does not perpetuate unfair or discriminatory outcomes. It covers ten types of biases in AI data training like: data bias, algorithmic bias, temporal bias, social bias, country bias, race bias, interaction bias, etc.

AI Hostility Data Training Index

The AI Hostility Data Training Index focuses on identifying and mitigating data that promotes hostility, hate speech, or harmful behaviors. It ensures that AI systems do not contribute to the spread of harmful content or facilitate hostile actions. This index is a valuable component of the larger ethical framework necessary for responsible AI development.

In essence, the AI Hostility Data Training Index functions as a safeguard mechanism against the inclusion of content that could lead to negative consequences when integrated into AI models. It evaluates the extent to which the training data is screened, filtered, or curated to eliminate instances of hostility, thereby promoting ethical and responsible AI development.

Data Source Diversity Index

The Data Source Diversity Index measures the variety of sources used in AI data training, ensuring that the model learns from a wide range of perspectives and contexts, reducing the risk of skewed learning. A diverse dataset helps AI models learn from a broader range of perspectives, reducing the risk of bias and ensuring more balanced and accurate outputs. The index assesses how well the training data represents different viewpoints and perspectives. It ensures that no single source dominates the training data, which can lead to bias or limited understanding.

Ethical Data Collection Practices Index

The Ethical Data Collection Practices Index evaluates whether the data used for training the AI model was obtained through ethical means, respecting data subjects’ privacy, and complying with data protection regulations. Developers ensure that data collection adheres to relevant data protection regulations, such as GDPR or HIPAA. Compliance with these regulations helps safeguard data subjects’ rights and ensures that their data is handled responsibly [8].

Transparency in Data Usage Index

The Transparency in Data Usage Index assesses how transparently AI developers communicate the data sources and methodology used for training the model. It ensures that users and stakeholders are aware of the data’s origins and potential biases [5]. Developers thoroughly document the sources of training data, indicating where the data was collected from, its nature, and any potential biases or limitations associated with each source. The index holds developers accountable for the quality and ethical considerations of AI models. Transparent communication encourages responsible behavior and adherence to ethical standards.

Data Anonymization and De-identification Index

The Data Anonymization and De-identification Index measures the extent to which personally identifiable information is removed from the training data to protect individuals’ privacy. The Data Anonymization and De-identification Index assesses how effectively the identifiable information is transformed or removed. Techniques such as pseudonymization, aggregation, and generalization are employed to ensure that the data remains useful for training while reducing the risk of re-identifying individuals.

Ethical Data Augmentation Index

The Ethical Data Augmentation Index evaluates whether the data augmentation techniques used during training maintain the integrity and context of the original data, avoiding the creation of misleading or harmful samples. Data augmentation involves techniques that modify or enhance the training data to improve the model’s performance and generalization capabilities [3]. However, the challenge lies in ensuring that these augmentation techniques do not inadvertently introduce biases, misinformation, or harmful content into the AI model’s learning process.

Human Oversight and Review Index

The Human Oversight and Review Index assesses the extent to which human reviewers are involved in monitoring the AI training process, ensuring ethical decisions and mitigating potential risks. By involving human expertise, judgment, and intervention, developers can ensure that AI systems operate within ethical boundaries, mitigate biases, and align with societal values [10]. This index is a crucial component of responsible AI development, promoting transparency, accountability, and the ethical deployment of AI technologies.

Summary

In conclusion, our compassionate AI Lab’s pursuit has yielded a profound set of AI indexes and measurement benchmarks, dedicated to safeguarding future generations and empowering humanity.

This article has shed light on ten indispensable ethical AI indexes critical to the conscientious development and deployment of Large Language Models (LLMs) through meticulous data training and modeling.

These benchmarks serve as beacons, guiding the mitigation of biases, avoidance of deceptive pitfalls, championing transparency, endorsing diverse data sources, and unwavering adherence to ethical tenets throughout the intricate journey of AI model evolution.

As Large Language Models become an integral part of our technological landscape, it is essential to prioritize ethical considerations during data training and modeling. The ten ethical indexes outlined above provide a comprehensive framework for guiding the development and deployment of LLMs that are transparent, unbiased, inclusive, and accountable.

By adhering to these principles, we can harness the power of AI to drive positive change while minimizing potential risks and pitfalls. Responsible AI development is not just an aspiration; it is an ethical imperative that shapes the future of AI for the better.

By measuring to these indexes, AI developers can contribute to a future where AI-driven technologies enrich society while adhering to the highest ethical standards.

References

- Hagendorff, T. The Ethics of AI Ethics: An Evaluation of Guidelines. Minds & Machines 30, 99–120 (2020). https://doi.org/10.1007/s11023-020-09517-8

- Altuntas E, Gloor PA, Budner P. Measuring Ethical Values with AI for Better Teamwork. Future Internet. 2022; 14(5):133. https://doi.org/10.3390/fi14050133.

- Ray, Sri Amit. “From Data-Driven AI to Compassionate AI: Safeguarding Humanity and Empowering Future Generations.” Amit Ray, June 17, 2023. https://amitray.com/from-data-driven-ai-to-compassionate-ai-safeguarding-humanity-and-empowering-future-generations/.

- Ray, Sri Amit. “Ethical Responsibility In Large Language AI Models.” Amit Ray, July 7, 2023. https://amitray.com/ethical-responsibility-in-large-language-ai-models/.

- Ray, Sri Amit. “Calling for a Compassionate AI Movement: Towards Compassionate Artificial Intelligence” Amit Ray, June 25, 2023. https://amitray.com/calling-for-a-compassionate-ai-movement/.

- Ray, Sri Amit. “Compassionate Artificial Intelligence Scopes and Challenges” Amit Ray, April 16, 2018, https://amitray.com/compassionate-artificial-intelligence-scopes-and-challenges/

- Ray, Sri Amit. Ethical AI Systems: Frameworks, Principles, and Advanced Practices, . Compassionate AI Lab, 2022.

- European Parliamentary Research Service, (2020), The ethics of artificial intelligence: Issues and initiatives

- Kazim E, Koshiyama AS. A high-level overview of AI ethics. Patterns (N Y). 2021 Sep 10;2(9):100314. doi: 10.1016/j.patter.2021.100314. PMID: 34553166; PMCID: PMC8441585.

- Bleher H, Braun M. Reflections on Putting AI Ethics into Practice: How Three AI Ethics Approaches Conceptualize Theory and Practice. Sci Eng Ethics. 2023 May 26;29(3):21. doi: 10.1007/s11948-023-00443-3. PMID: 37237246; PMCID: PMC10220094.

Download the PDF File: The 10 Ethical AI Indexes For LLM AI Data Training and Modelling