Abstract

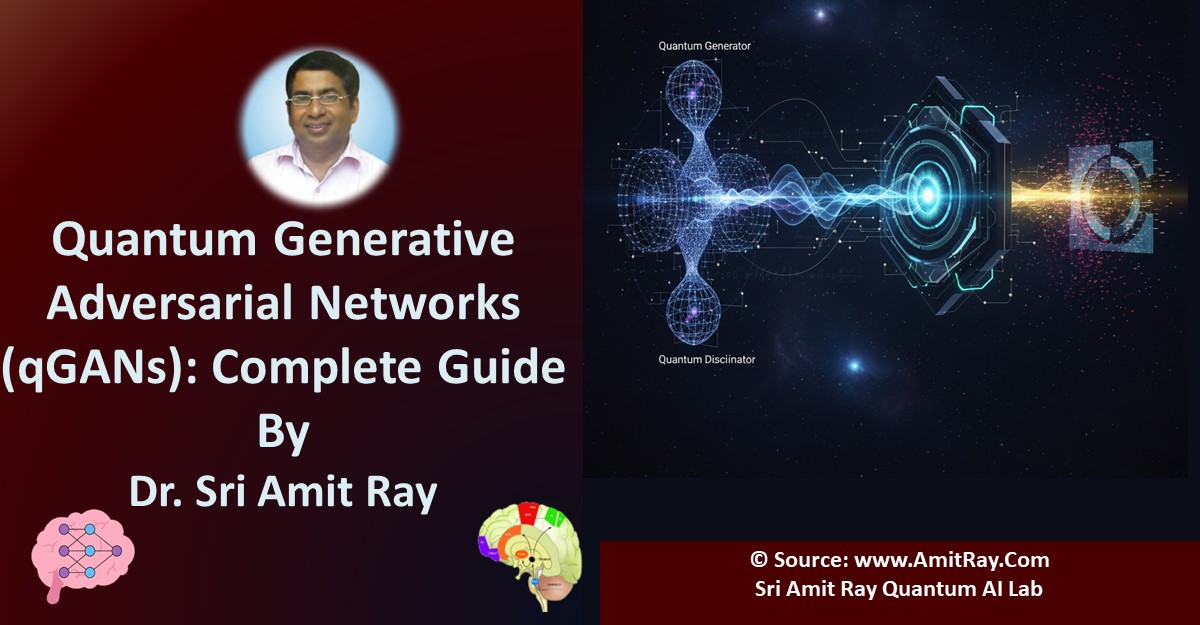

Quantum Generative Adversarial Networks (qGANs) combine quantum computing and machine learning, using quantum phenomena such as superposition and entanglement to model complex data distributions. This guide provides a comprehensive framework for implementing qGANs, tailored to current Noisy Intermediate-Scale Quantum (NISQ) devices. We outline the theoretical foundations, contrast qGANs with classical GANs, and detail hybrid quantum-classical architectures that mitigate NISQ limitations.

The guide includes prerequisites, a step-by-step implementation using Qiskit and PyTorch, and a complete code example for a qGAN implementation. We explore optimization techniques, such as noise mitigation and Rényi divergence-based losses, and discuss applications in data augmentation and financial modeling. Challenges like hardware noise, scalability, and training instability are addressed with solutions like tensor networks and quantum kernel discriminators. Supported by verified references, this guide serves as a practical resource for researchers and practitioners in quantum machine learning.

Introduction

Quantum Generative Adversarial Networks (qGANs) merge the generative power of classical Generative Adversarial Networks (GANs) with quantum computing's unique capabilities, such as superposition and entanglement, to model complex data distributions. As of September 2025, qGANs are pivotal in quantum machine learning (QML), offering potential exponential speedups for tasks like data augmentation and quantum state generation. This guide provides a comprehensive roadmap for implementing qGANs, from theoretical foundations to practical deployment on Noisy Intermediate-Scale Quantum (NISQ) devices, drawing on recent advancements [1, 2, 3].

A classical GAN consists of two components trained against each other in a zero-sum game: a generator, which produces synthetic samples, and a discriminator, which tries to tell those samples apart from real data. In a qGAN, the generator is typically replaced with a quantum generator: a parameterized quantum circuit that produces classical data upon measurement. The discriminator often remains a classical neural network, which makes the overall model a hybrid quantum-classical one [3].
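To make the hybrid split concrete, here is a minimal sketch in plain Python, with no quantum SDK involved. It assumes a toy two-qubit generator (an RY rotation on each qubit followed by a CNOT) simulated by hand, and a single logistic unit standing in for the classical discriminator. The function names, the circuit layout, and the parameter values are illustrative assumptions, not the architecture used later in this guide.

```python
import math
import random

def generator_probs(theta0, theta1):
    """Measurement probabilities of a toy circuit: RY on each qubit, then CNOT(0 -> 1)."""
    # RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>, so all amplitudes stay real.
    q0 = (math.cos(theta0 / 2), math.sin(theta0 / 2))
    q1 = (math.cos(theta1 / 2), math.sin(theta1 / 2))
    # Amplitudes of the product state, keyed by the bit pair (b0, b1).
    amps = {(b0, b1): q0[b0] * q1[b1] for b0 in (0, 1) for b1 in (0, 1)}
    # CNOT with qubit 0 as control flips qubit 1 whenever b0 == 1.
    after = {(b0, b1 ^ b0): a for (b0, b1), a in amps.items()}
    # Born rule: probability is the squared amplitude.
    return {bits: a * a for bits, a in after.items()}

def sample_generator(theta0, theta1, shots, rng):
    """Simulate measurement: draw classical bit pairs from the circuit's distribution."""
    probs = generator_probs(theta0, theta1)
    outcomes = list(probs)
    weights = [probs[o] for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=shots)

def discriminator(bits, weights, bias):
    """Classical logistic unit: estimated probability that a sample is real."""
    z = bias + sum(w * b for w, b in zip(weights, bits))
    return 1.0 / (1.0 + math.exp(-z))

rng = random.Random(0)  # fixed seed so the sketch is reproducible
# Hypothetical parameters: theta0 = pi/2 and theta1 = 0 give an entangled, Bell-like state.
fake_samples = sample_generator(math.pi / 2, 0.0, shots=1000, rng=rng)
# Hypothetical, untrained discriminator parameters.
scores = [discriminator(s, weights=[0.5, -0.5], bias=0.0) for s in fake_samples]
```

In a full training loop, the discriminator parameters and the rotation angles would be updated in alternation. Here the point is only the data flow: circuit parameters determine a distribution, measurement turns it into classical samples, and a classical model scores those samples.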
